Staying on Top of the Wave

I used to work for a computer researcher who didn’t actually use computers. Academics can sometimes be quirky, you know? He just had ~/.forward set up to print every message (on paper) as it came in. One problem with this setup was that people would send him résumés as PostScript (and later PDF) attachments. The printing system would print these out as plain text and use up all of the paper in the printer if someone didn’t catch it.

This raised a question for someone:

Why would PDFs print as plain text and how could you set your printer up to do that?

I’m so confused by that last sentence and tried reading it a different way to make sense of it. I still don’t get what you mean. (jean farouche, @JFarouche19, March 10, 2021)

and I realized that the nightmare that is printing under Unix is rather less nightmarish these days. The ubiquity of PDF means that I don’t really have to worry about converting files to the proper device-dependent format. Line printers are gone, so I don’t have to worry about the difference between printing text and printing graphics. Unicode means I don’t have to worry about whether a document is in English, French, or Russian.

Of course, all of this convenience comes at a cost. For instance, Unicode is a complex soup of characters, codepoints, encodings, and normal forms. When I was learning C, way back when, in between mammoth hunts and rubbing sticks together to make fire, everything was US English, in ASCII, and a letter was a character was a byte was an octet.
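To make that soup a little more concrete, here is a small Python sketch. It is only an illustration: the sample strings and the `describe` helper are made up for this post, and the sketch assumes nothing beyond Python 3 and its standard `unicodedata` module. It spells the same word two ways and counts code points and bytes for each.

```python
import unicodedata

# Two spellings of the same visible word (illustrative sample data).
composed = "r\u00e9sum\u00e9"        # each é is one precomposed code point
decomposed = "re\u0301sume\u0301"    # each é is "e" plus a combining acute accent


def describe(text: str) -> dict:
    """Count code points and encoded bytes for one string (hypothetical helper)."""
    return {
        "code_points": len(text),
        "utf8_bytes": len(text.encode("utf-8")),
        "utf16_bytes": len(text.encode("utf-16-le")),
    }


print(describe("resume"))    # plain ASCII text
print(describe(composed))
print(describe(decomposed))

# The two spellings look identical on screen but compare unequal
# until both are brought to the same normal form.
print(composed == decomposed)
print(unicodedata.normalize("NFC", decomposed) == composed)
```

The plain ASCII word is six code points and six UTF-8 bytes. The accented spellings come out at six code points and eight UTF-8 bytes for one, and eight code points and ten UTF-8 bytes for the other, even though a reader sees the same six letters. In the ASCII-only world, all of those numbers were the same.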

This may have been limited, but at least it made things easy to learn. Which made me wonder about people wading into the field these days: how do they learn, when even a simple “Hello world” program has all this complexity behind it?

There are two answers to that. In some cases, say, Unity or Electron, tutorials will typically give you a bunch of standard scaffolding (headers, includes, starter classes) and say “Don’t worry about all that for now; we’ll cover it later.” In other cases, say, Python, a lot of the complexity is optional enough that it doesn’t need to be shown to the beginner.

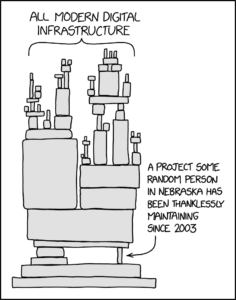

New people are coming in at the top of this graph. I came in a bit further down, but my entry point was already on top of a bunch of existing work by other people. After all, I got to use (complicated) operating systems instead of running code on bare metal, text editors rather than toggling my code in on the front panel, and much besides. People starting out today are wading in at the middle, just like I did, only at a different point.

And this is what we humans do. When Isaac Newton talked about standing on the shoulders of giants, this is what he was talking about. We build tools using existing tools, and then use those new tools to build newer tools, changing the world as we go.

Of course, new technology can also disrupt and undermine the old way of doing things, and there’s a persistent fear of AI taking away jobs that used to be done by humans, just as automation has. Just as dockworkers now use forklifts to load cargo rather than carry it themselves, companies now use chatbots to handle the front line of customer support. Machines have largely supplanted travel agents, and have even started writing press releases. What’s a poor meat puppet to do?

I keep thinking of how longshoremen became forklift operators: when new technology comes along, we still need to control it, steer it, decide what it ought to do. I used to install Unix on individual computers from CD, one at a time. These days, I use tools like Terraform and Puppet to orchestrate dozens or hundreds of machines. And I think that’s where the challenge is going to be in the future: staying on top of new technology and deciding what to do with it.